Background

When I prepare classes, I spend a significant amount of time (maybe 20-30% of the whole process) finding or constructing **perfect** language examples or images that I can use for my Japanese language class. A classic example is images for the unit on counters (e.g., 一枚、二枚、一冊、二冊 etc.), where an instructor has to find or create images for different numbers of objects and different shapes. It's a lot harder than you think to find an image that fits the exact description (try finding an image of 8 pieces of paper, for example).

So I became very curious what AI-based image generation tools can offer and wanted to see how useful they can be in designing images, text, and audio that can be used for language instruction.

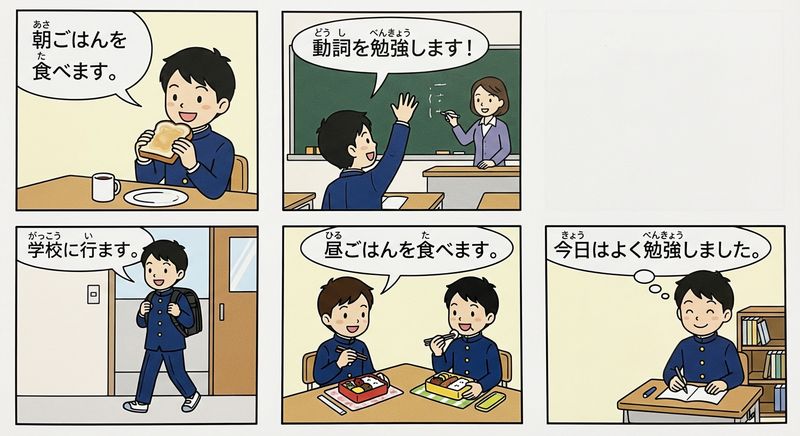

Starting with images, I have tried to create several different types of instructional materials with AI. Below are some example resources generated by AI and the prompts that I used to design these materials.

OpenAI's DALL-E (https://openai.com/dall-e-3), Stability AI's Stable Diffusion (https://auth.stability.ai), and Midjourney (https://www.midjourney.com/) all offered text-to-image models in 2023. Each model had its own strengths (and weaknesses), but overall they successfully produced target objects (such as "books" and "apples") as photorealistic images. The results were inconsistent, however, especially when I provided detailed prompts. For example, the image was not accurate when I asked for "five apples and two bananas on the table."

The cost was another challenge. The cost of generating images and videos with AI is usually significantly higher than that for text. For example, the latest models in 2025 (Imagen-4, etc.) cost about $0.20-$0.40 per image. If we need to generate dozens of images regularly, the cost is a prohibitive factor for instructional use.

A great deal of criticism of text-to-image technology has come from creative professionals such as artists, photographers, and videographers. While I personally have no objection to using AI-generated images, I agree with the need for ethical practices in using them (or AI in general). One suggested practice is to always disclose that an image is AI-generated. Unfortunately, there is no consensus on the ethical approach to AI-generated images. While some believe disclosure is good enough, others feel any use of AI-generated images is offensive. This is a major hurdle for anyone who intends to use AI for classroom instruction.

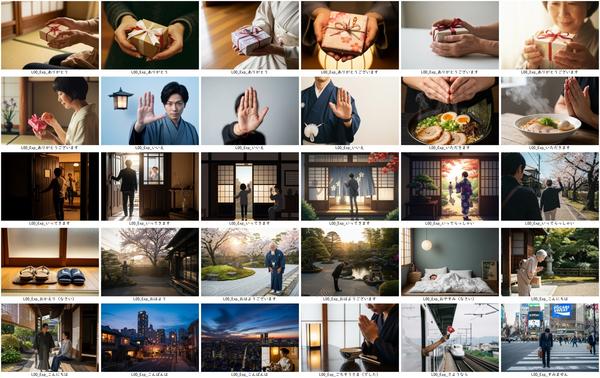

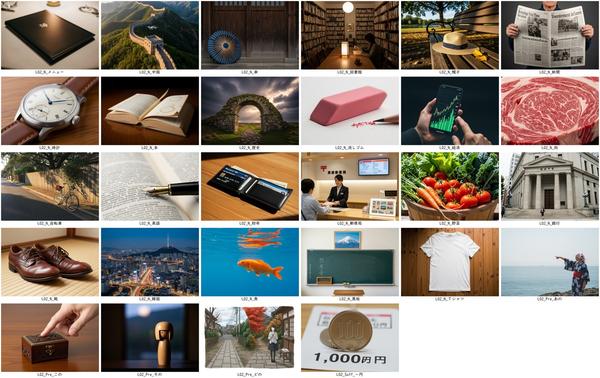

Below is the first set of images that I generated with Stability AI in 2023. The prompts for these models were rather simple because the images went wild when I provided a prompt that was too long. For an image of books, for example, the overall quality was better with simple commands such as "books" or "books on the desk" than with a long, detailed description. An example prompt is also presented.

Sample Command (StabilityAI)

A realistic photo of following object for instructional materials. Simplify the image so that it highlights the object: [A SINGLE WORD TARGET (such as "teacher" and "apple")] [INFO] Generated image ....

🖌️ Images

See more examples in my Amazon S3 folder: https://t-nagano.link/FunWithAI-StabilityAI

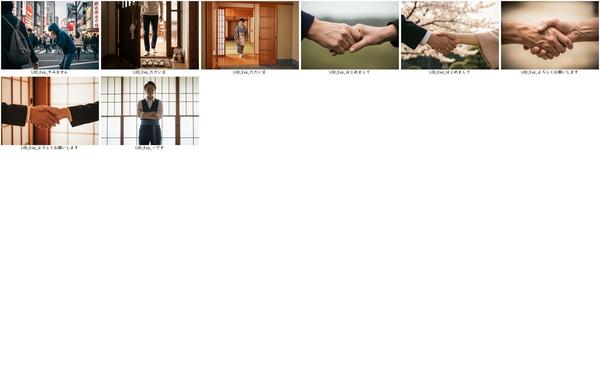

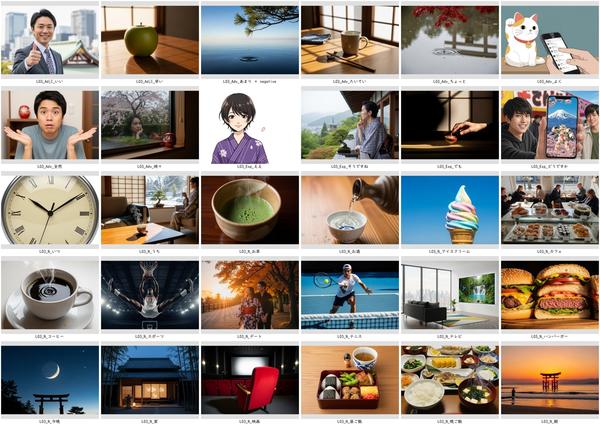

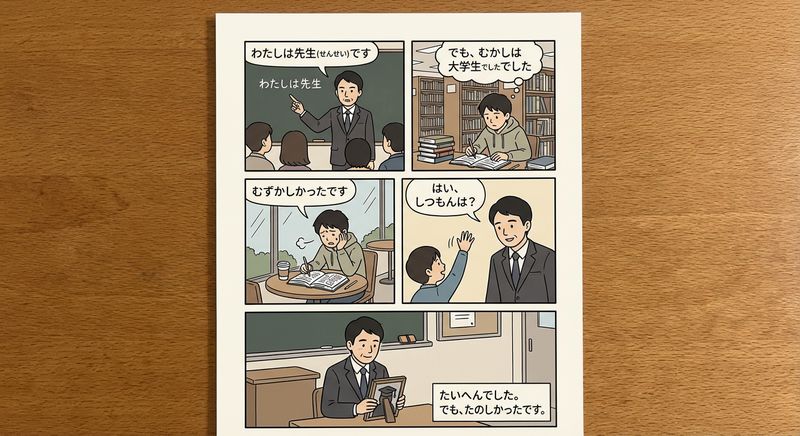

Google's Imagen 3 (https://developers.googleblog.com/imagen-3-arrives-in-the-gemini-api/) was probably the most advanced AI image generation tool in 2024. Imagen 3 was particularly good at understanding the instructions (prompts) written in natural language. It claims to be able to generate English text (such as a sign on a store), but it often produced errors. It never produced correct Japanese text either. The prompt must be in English -- if I use a Japanese word in the prompt, it often produces erratic images (you can see some of them in the sample images below).

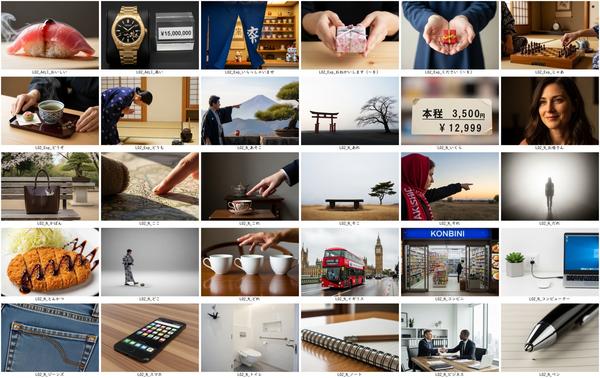

With this model, the price of image generation came down to an accessible range of about $0.1-$0.2. In late 2024, I generated many images that can be used in our Japanese language courses. The first set of images is for the vocabulary items in the Genki textbooks. Below are the prompts and the images that they generated.

Sample Prompt (Imagen3)

Create a photorealistic image without any text that illustrates the following expression for learners of Japanese.: teacher or professor. [INFO] Generated image ....

🖌️ Images (examples)

The original images are available in my Amazon S3 folder: https://t-nagano.link/FunWithAI-Imagen3-1

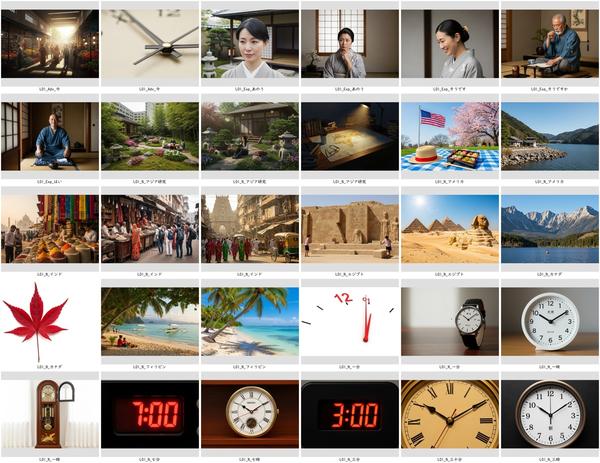

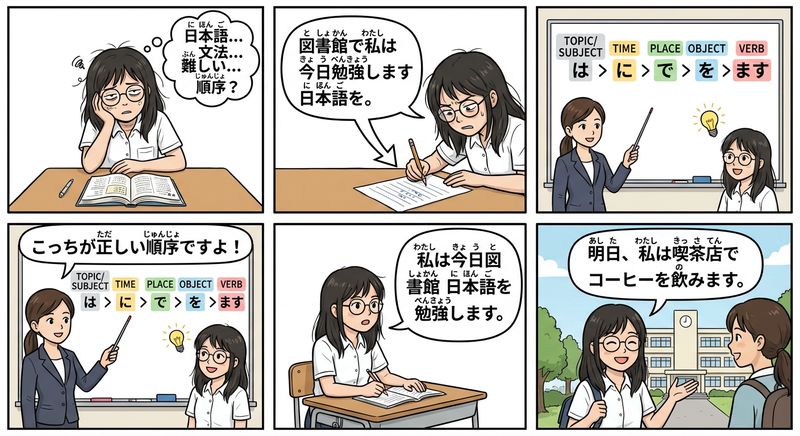

In late 2024, DeepSeek (https://www.deepseek.com) started to offer extremely affordable API access to its reasoning model (DeepSeek R1), which turned out to be extremely good at Japanese language resources (see below for more information about this). I tried using a combination of DeepSeek R1 and Imagen3 to make more complex images. For example:

- For the unit of invitation 「ませんか」 in Chapter 3, I made images for the following expressions:

- コーヒーを飲みませんか。

- 映画を見ませんか。

- セントラルパークで散歩しませんか。

- 図書館で勉強しませんか。

- ニューヨークの美術館へ行きませんか。 ... etc.

The process involved two steps:

- Creating a list of practice sentences for the target grammar (e.g., 「ませんか」) with DeepSeek (see below for more information about this)

- Generating images for these example sentences with Imagen3

Since Imagen3 does not perform very well with Japanese prompts, I had to create English translations of the Japanese practice sentences. I noticed that Imagen3 did not generate any meaningful Japanese text, so I added an instruction not to use any text in the image (but the model still generated text sometimes). People from Asian backgrounds were overrepresented in the images, so I also added an instruction to diversify the people's backgrounds.
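The hand-off between the two steps can be sketched in a few lines of Python. This is a minimal sketch, not the script used for this project: it assumes the DeepSeek output is saved as plain text with one "Japanese; English" pair per line (the format requested in the prompt), and the function names are illustrative. The prompt wording is taken from the sample Imagen3 prompt in this section.

```python
from typing import List, Tuple

# Wording taken from the sample Imagen3 prompt in this write-up.
PROMPT_PREFIX = (
    "Make a realistic photo for the following sentence. "
    "Make sure to represent a diverse background. "
    "Do not include any text or written characters. "
)


def parse_pairs(raw: str) -> List[Tuple[str, str]]:
    """Split 'Japanese; English' lines into (japanese, english) tuples."""
    pairs = []
    for line in raw.splitlines():
        line = line.strip()
        if not line or ";" not in line:
            continue  # skip blank lines and anything that is not a data line
        japanese, english = line.split(";", 1)
        pairs.append((japanese.strip(), english.strip()))
    return pairs


def build_image_prompts(raw: str) -> List[str]:
    """Build one English image prompt per practice sentence."""
    return [PROMPT_PREFIX + english for _, english in parse_pairs(raw)]


sample = """コーヒーを飲みませんか。; Would you like to drink coffee?
映画を見ませんか。; Would you like to watch a movie?"""

prompts = build_image_prompts(sample)
for prompt in prompts:
    print(prompt)
```

Only the English half goes into the image prompt, which reflects the observation above that Japanese words in the prompt tend to produce erratic images.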

Sample Prompt (DeepSeek R1)

「~ませんか (e.g., コーヒーを飲みませんか。)」を使った例文を、日本語の教科書「げんき」を使ってニューヨークのラガーディアコミュニティーカレッジで日本語を学習している学生のために50個作ってください。「げんき」のCh.1-Ch.03までの単語を使うようにして、その範囲内の単語だけの例文を作るようにしてください。トピックはニューヨークに関する場所やイベントを時折、使うようにしてください。出力はデータのみにして、「日本語の例文; 英語の訳」形式で出して、解説はつけないでください。
コーヒーを飲みませんか。; Would you like to drink coffee?
映画を見ませんか。; Would you like to watch a movie?
セントラルパークで散歩しませんか。; Would you like to take a walk in Central Park?
図書館で勉強しませんか。; Would you like to study at the library?
ニューヨークの美術館へ行きませんか。; Would you like to go to a museum in New York?
昼ご飯を食べませんか。; Would you like to eat lunch?
紅茶を飲みませんか。; Would you like to drink tea?
友達に会いませんか。; Would you like to meet a friend?
タイムズスクエアを見ませんか。; Would you like to see Times Square?
音楽を聞きませんか。; Would you like to listen to music?
本を読みませんか。; Would you like to read a book?
公園でピクニックしませんか。; Would you like to have a picnic in the park?
ニューヨークのパレードを見ませんか。; Would you like to watch a parade in New York?
... [snip]

A note about diverse backgrounds was added to the prompt. The skewed representation of people in Imagen3 became a major concern (i.e., minorities were not represented in images). When the target sentence included "Japanese," people from Asian backgrounds were overrepresented. For example, many Japanese-looking people appeared for the sentence "I go to the Japanese class," which does not make sense because students learning Japanese would not typically all look Japanese. AI-generated nudity also became an issue, and Imagen3 later added a very strong filter, which blocked a certain set of words such as "student", "young", and "girls." The prompts, therefore, had to be modified. For example, I had to use "a classroom packed with people" instead of "a classroom packed with students."

A note about text/characters was also added since Imagen3 was never able to produce correct text in its images. About 50-70% of the English text was wrong, and almost none of the Japanese text was correct.

Sample Prompt (Imagen3)

Make a realistic photo for the following sentence. Make sure to represent a diverse background. Do not include any text or written characters. Would you like to drink coffee? [INFO] Generated image ....

🖌️ Images

The original images are available in my Amazon S3 folder: https://t-nagano.link/FunWithAI-Imagen3-2

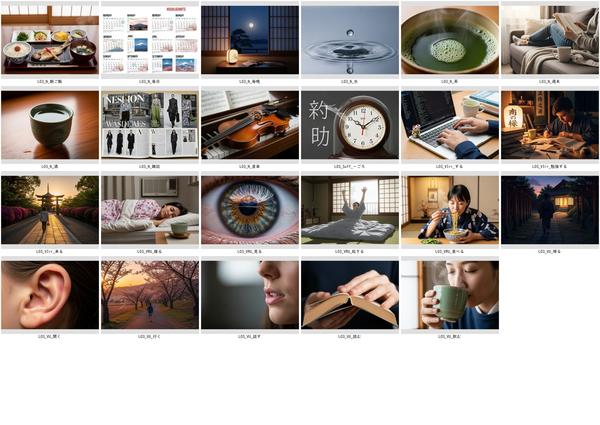

Google's Nano Banana (https://blog.google/technology/ai/nano-banana-pro/) is the model that I used after Imagen 3 (it is a Gemini image model, served through the API as gemini-3-pro-image-preview, rather than a release of Imagen 4). Imagen 3 already generated images of superb quality but had some limitations, most of which have been addressed in Nano Banana. For example, Imagen 3 sometimes made realistic but illogical renderings of human hands (three hands, etc.) and almost always generated erratic text (Japanese text was 100% inaccurate in Imagen 3). In Nano Banana, these errors are mostly eliminated.

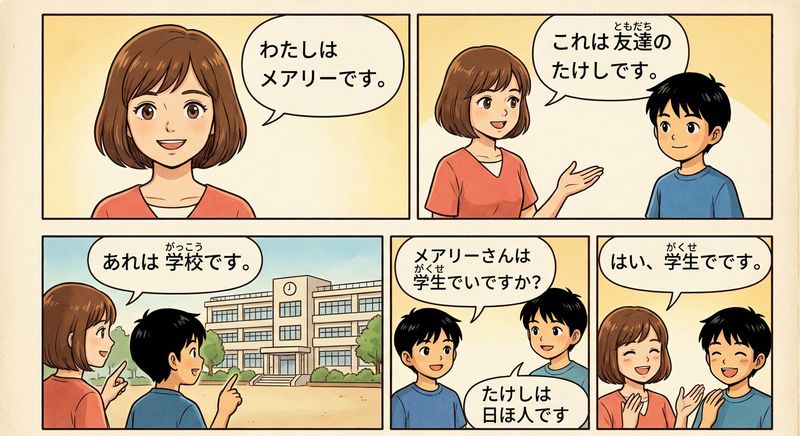

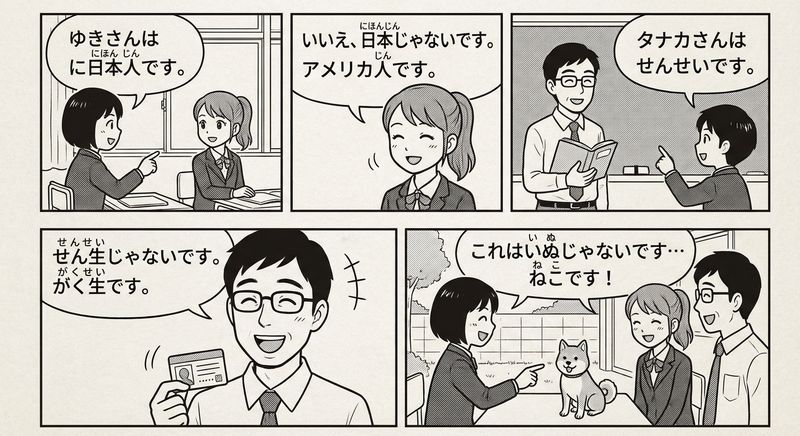

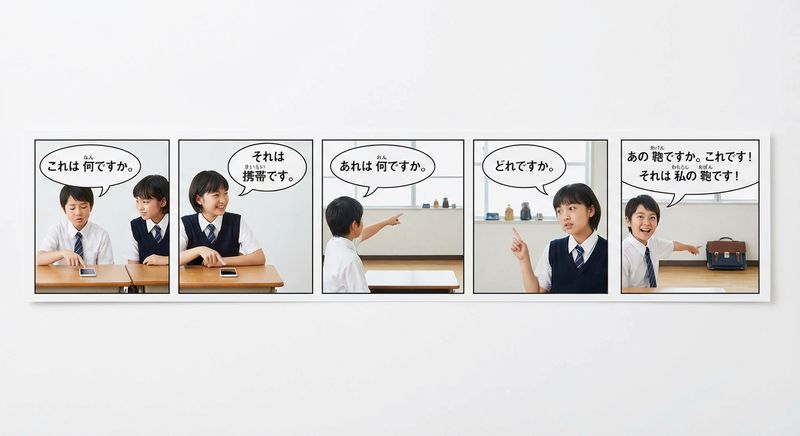

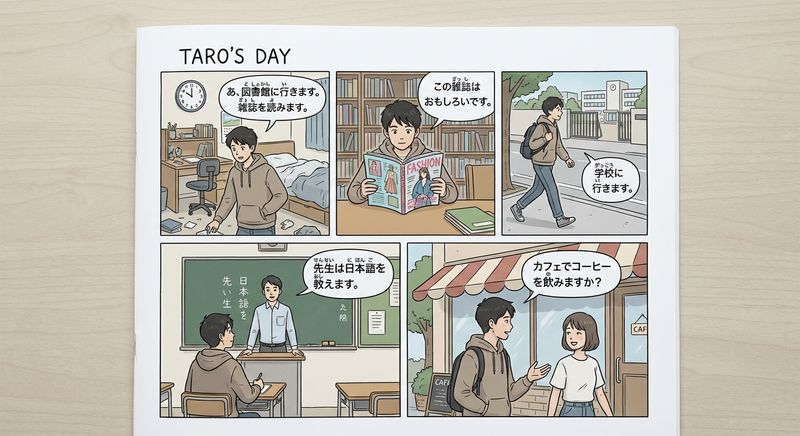

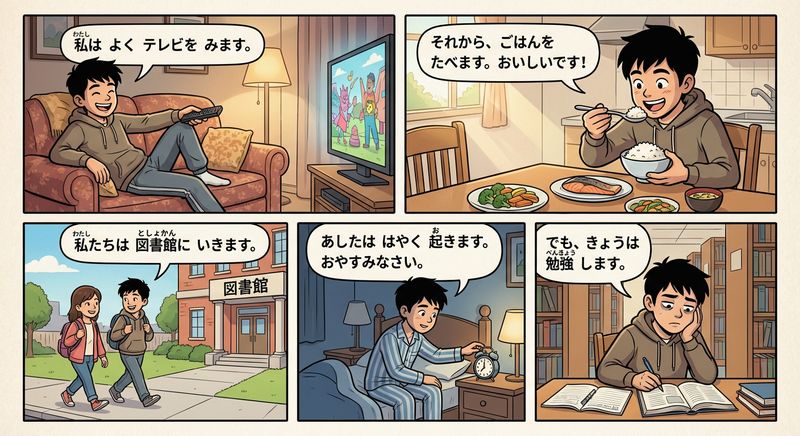

Nano Banana accepts a rather long prompt, which made it possible to program a multi-step process for generating images. In my experiment, I used the following steps to generate images with Nano Banana.

- Generate an image prompt with DeepSeek (very cheap and fairly accurate).

- Review the generated prompt and make corrections/adjustments.

- Send the revised prompt to Nano Banana.

Below is an example of image prompt generation with DeepSeek.

Sample Prompt (DeepSeek)

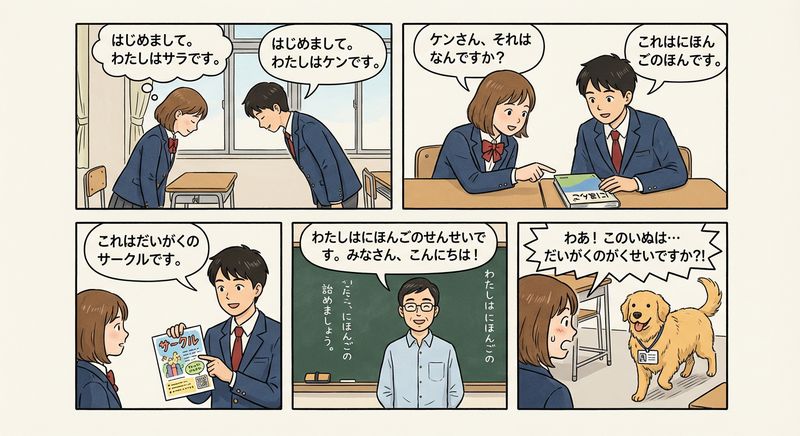

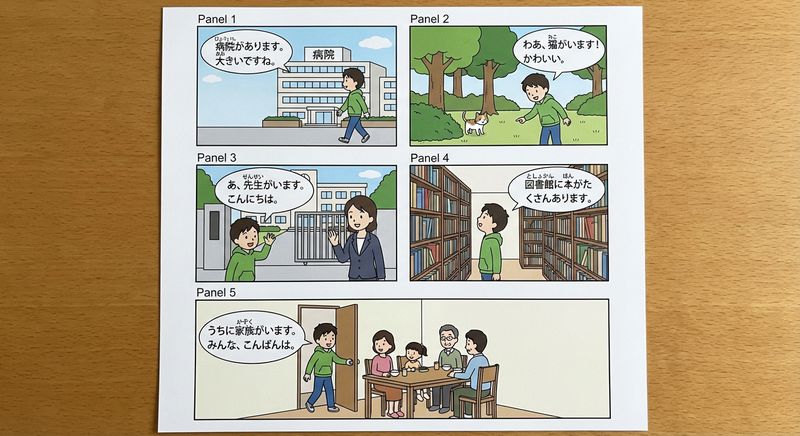

「~ませんか (e.g., コーヒーを飲みませんか。)」を使った5コマの漫画のアウトライン描写を書いてください。描写は、それぞれのコマごとに15-20語くらいの英語で書くようにして、全ての5コマの描写を一つのパラグラフで提示してください。漫画の描写は英語ですが、漫画の吹き出しは、日本語の教科書「げんき」のCh.1-Ch.03を勉強している学生にわかるようなレベルの日本語を使うようにしてください。 [Comic Outline Description] In the first panel, a student looks lonely while sitting alone at a cafeteria table during lunch break. A classmate notices this and walks over. Speech bubble: 「いっしょにランチをたべませんか。」 In the second panel, the first student smiles and nods, making room at the table. They begin eating together happily. Speech bubble: 「はい、たべましょう!」 The third panel shows them chatting after finishing lunch. One student pulls out two coffee cans from their bag. Speech bubble: 「コーヒーをのみませんか。」 The fourth panel depicts them enjoying coffee together, looking very friendly. Speech bubble: 「ああ、おいしいです。」 In the final panel, they are walking to their next class together, planning to meet again. Speech bubble: 「あしたもいっしょにべんきょうしませんか。」

See more examples of image prompts in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekComicOutline
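For the review step, it can help to pull the Japanese speech bubbles out of the generated outline so they can be checked on their own before the prompt is sent to the image model. The following is a minimal sketch that assumes every bubble follows the "Speech bubble: 「...」" pattern seen in the sample outline; the function name is illustrative and is not part of the scripts used here.

```python
import re
from typing import List

# Matches the text between 「 and 」 that follows the label "Speech bubble:".
BUBBLE = re.compile(r"Speech bubble:\s*「(.+?)」")


def extract_bubbles(outline: str) -> List[str]:
    """Return the speech-bubble texts in the order they appear."""
    return BUBBLE.findall(outline)


outline = (
    "In the first panel, a student looks lonely while sitting alone. "
    "Speech bubble: 「いっしょにランチをたべませんか。」 "
    "In the second panel, the first student smiles and nods. "
    "Speech bubble: 「はい、たべましょう!」"
)

bubbles = extract_bubbles(outline)
for number, text in enumerate(bubbles, start=1):
    # Numbering assumes one bubble per panel, as in the sample outline.
    print(f"Panel {number}: {text}")
```

If an outline comes back with fewer bubbles than panels, that is a quick signal that the prompt needs correction before it is used.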

Images were generated with Nano Banana using these prompts.

Sample Prompt (Nano Banana)

generate_images.py --model "gemini-3-pro-image-preview" --prompt "[Comic Outline Description] In the first panel, a student looks lonely while sitting alone at a cafeteria table during lunch break. A classmate notices this and walks over. Speech bubble: 「いっしょにランチをたべませんか。」 In the second panel, the first student smiles and nods, making room at the table. They begin eating together happily. Speech bubble: 「はい、たべましょう!」 The third panel shows them chatting after finishing lunch. One student pulls out two coffee cans from their bag. Speech bubble: 「コーヒーをのみませんか。」 The fourth panel depicts them enjoying coffee together, looking very friendly. Speech bubble: 「ああ、おいしいです。」 In the final panel, they are walking to their next class together, planning to meet again. Speech bubble: 「あしたもいっしょにべんきょうしませんか。」" [INFO] Generated image ....

🖌️ Images

See more images in my Amazon S3 folder: https://t-nagano.link/FunWithAI-Imagen4

DeepSeek (https://www.deepseek.com) was introduced in the U.S. in 2025 and offered a highly capable reasoning model at a significantly reduced cost. In my observation, DeepSeek performs particularly well on topics related to Japanese language learning, probably because the model has been trained on a large amount of Japanese language learning material (DeepSeek was developed in China). For example, DeepSeek understands what kind of words and grammatical structures to use from an instruction as simple as "Use Japanese appropriate for students who are studying chapter 3 of the Genki textbook."

Its reasoning model (R1) was extremely affordable (less than $0.01 for each query), and it was possible to produce a large number of text-based materials with it. As an initial project, I generated a large number of example sentences that I can use in my class. The generated output was appropriate for the students' level, and its topics were diverse. To be honest, it generated much better example sentences than the ones I make, since I tend to use the same topics and sentence patterns in my examples. Errors did happen, but they were rare (about 1 in 100-200 sentences).

Sample Prompt (DeepSeek R1)

「~ませんか (e.g., コーヒーを飲みませんか。)」を使った例文を、日本語の教科書「げんき」を使ってニューヨークのラガーディアコミュニティーカレッジで日本語を学習している学生のために50個作ってください。「げんき」のCh.1-Ch.03までの単語を使うようにして、その範囲内の単語だけの例文を作るようにしてください。トピックはニューヨークに関する場所やイベントを時折、使うようにしてください。出力はデータのみにして、「日本語の例文; 英語の訳」形式で出して、解説はつけないでください。
コーヒーを飲みませんか。; Would you like to drink coffee?
映画を見ませんか。; Would you like to watch a movie?
セントラルパークで散歩しませんか。; Would you like to take a walk in Central Park?
図書館で勉強しませんか。; Would you like to study at the library?
ニューヨークの美術館へ行きませんか。; Would you like to go to a museum in New York?
昼ご飯を食べませんか。; Would you like to eat lunch?
紅茶を飲みませんか。; Would you like to drink tea?
友達に会いませんか。; Would you like to meet a friend?
タイムズスクエアを見ませんか。; Would you like to see Times Square?
音楽を聞きませんか。; Would you like to listen to music?
本を読みませんか。; Would you like to read a book?
公園でピクニックしませんか。; Would you like to have a picnic in the park?
ニューヨークのパレードを見ませんか。; Would you like to watch a parade in New York?
ケーキを食べませんか。; Would you like to eat cake?
水を飲みませんか。; Would you like to drink water?
映画館へ行きませんか。; Would you like to go to the movie theater?
学校で日本語を勉強しませんか。; Would you like to study Japanese at school?
自由の女神を見ませんか。; Would you like to see the Statue of Liberty?
コーヒーショップで休みませんか。; Would you like to rest at a coffee shop?
ニューヨークのコンサートへ行きませんか。; Would you like to go to a concert in New York?
テレビを見ませんか。; Would you like to watch TV?
食事をしませんか。; Would you like to have a meal?
散歩しませんか。; Would you like to take a walk?
... [snip]

📄 Text/PDF

DeepSeek can generate an extremely large number of text-based outputs. There are over 1,000 sentences for each target structure. See all the files (in text format) in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekSent
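Because each query asks for 50 sentences, reaching 1,000 or more for one structure means combining many batches, and separate batches may repeat the same sentence. A minimal sketch of merging batches follows. It assumes each batch is raw text in the "Japanese; English" format and that exact matching on the Japanese sentence is enough to spot repeats; the function name is illustrative, not part of the scripts used here.

```python
from typing import Iterable, List, Tuple


def merge_batches(batches: Iterable[str]) -> List[Tuple[str, str]]:
    """Combine 'Japanese; English' batches, keeping the first copy of each sentence."""
    seen = set()
    merged = []
    for batch in batches:
        for line in batch.splitlines():
            if ";" not in line:
                continue  # ignore blank lines and stray commentary
            japanese, english = (part.strip() for part in line.split(";", 1))
            if japanese in seen:
                continue  # exact repeat from an earlier batch
            seen.add(japanese)
            merged.append((japanese, english))
    return merged


batch_1 = "コーヒーを飲みませんか。; Would you like to drink coffee?\n映画を見ませんか。; Would you like to watch a movie?"
batch_2 = "映画を見ませんか。; Would you like to see a movie?\n本を読みませんか。; Would you like to read a book?"

sentences = merge_batches([batch_1, batch_2])
print(len(sentences), "unique sentences")
```

Order is preserved, so the merged list still starts with the sentences from the first batch.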

DeepSeek (https://www.deepseek.com) is particularly good at generating text for Japanese language learners, probably because DeepSeek, a Chinese LLM initiative, has access to a large amount of material for Japanese language learners for training.

Here I tried to generate reading passages for each target grammar as well as for each Genki textbook chapter.

Sample Prompt (DeepSeek R1)

「~ませんか (e.g., コーヒーを飲みませんか。)」を使った50語程度の読み物を、日本語の教科書「げんき」を使って勉強している日本語を学習している学生のために作ってください。「げんき」のCh.1-Ch.03の単語を使うようにして、その範囲内の単語だけを利用して読み物を作るようにしてください。読み物は、ニューヨークのトピック、特にニューヨークのラガーディアコミュニティーカレッジで日本語を勉強している学生が興味があるようなトピック(アニメやゲーム、漫画など)を取り扱うようにして、5-6語程度のタイトルで始まるようにしてください。出力はデータのみにして、解説はつけないでください。
公園で本を読みませんか
こんにちは。私は鈴木です。大学の学生です。友達の高橋さんがいます。今日、天気がいいです。高橋さんが「公園で本を読みませんか。」と言います。私は「はい、読みましょう。」と言います。公園へ行きます。公園は大きいです。木の下で本を読みます。本は面白いです。それから、お茶を飲みませんか。高橋さんは「いいですね。」と言います。楽しい午後です。

📄 Text/PDF

DeepSeek can generate an extremely large number of text-based outputs. See all the files (in text format) in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekPass

These are reading passages for each chapter of the Genki textbook. Beginning-level passages (Ch01-Ch05) tend to use many vocabulary items and structures that the chapters have not covered yet, but they can still be useful with some modifications and corrections. Passages from Chapter 6 onward strike a good balance between the target vocabulary items and structures and new items. The new items are often presented with sufficient context, so proficient readers will be able to make reasonable guesses.

Sample Prompt (DeepSeek R1)

日本語の教科書「げんき」のCh.3のトピック、文法項目、ボキャブラリーに沿った50語程度の読み物を、日本語の教科書「げんき」を使って勉強している日本語を学習している学生のために作ってください。「げんき」のCh.1-Ch.03の単語を使うようにして、その範囲内の単語だけを利用して読み物を作るようにしてください。読み物は、ニューヨークのトピック、特にニューヨークのラガーディアコミュニティーカレッジで日本語を勉強している学生が興味があるようなトピック(アニメやゲーム、漫画など)を取り扱うようにして、5-6語程度のタイトルで始まるようにしてください。出力はデータのみにして、解説はつけないでください。
学校での一日の勉強
今日、私は朝から学校へ行きました。教室で友達と会いました。私たちは日本語の勉強をしました。先生が「教科書を読んでください」と言いました。私は声を出して読みました。友達は「質問してもいいですか」と聞きました。先生は「はい、どうぞ」と答えました。昼休みに、食堂でご飯を食べました。午後、図書館へ行って、本を借りました。とても楽しい一日でした。

📄 Text/PDF

DeepSeek can generate an extremely large number of text-based outputs. See all the files (in text format) in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekPass-2

I have also created images for each passage using Imagen3. See the image files in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekPass-3

Task-based Language Learning (TBLL) is an extremely engaging and effective approach to language teaching. However, the major challenge in implementing TBLL is the amount of time and energy required to build meaningful tasks that are relevant to the learning objective (e.g., target grammar or vocabulary) and to students' lives.

It turned out that DeepSeek (https://www.deepseek.com) can generate reasonably good tasks. We can also make the tasks specific to students' environments by adjusting prompts.

In my experience, it works best to generate a few (probably 4-5) different tasks by adjusting prompts -- at least one of them is good enough for actual classroom instruction.

Sample Prompt (DeepSeek R1)

「~ませんか (e.g., コーヒーを飲みませんか。)」を練習するtask-basedの課題を、日本語の教科書「げんき」を使って勉強している日本語を学習している学生のために作ってください。ニューヨークの大学や短大で勉強している学生のために、ニューヨークに関係するトピックを積極的に利用してください。「げんき」のCh.1-Ch.03までの単語を使うようにして、その範囲内の単語だけを利用するように心がけて下さい。タスクは、学生が実際に直面するような状況を設定するため、詳しいバックグラウンドやタスクで解決すべき問題について英語で詳しく述べて、その後に必要なボキャブラリーを提示し、指導のステップを3-5ステップで表示して下さい。出力はデータのみにして、日本語の単語や例文以外は英語で書いて、そしてタスクの名前、必要な教材、練習する言語スキル、必要な時間などを最初に書いて、タスクの詳細なステップを書いてください。解説はつけないでください。

### Task-Based Activity: "Exploring NYC Together - Museum Invitation Challenge"

**Task Name:** Weekend Museum Invitation Plan
**Target Language Pattern:** ~ませんか (e.g., コーヒーを飲みませんか。)
**Textbook Source:** Genki I, Chapters 1-3
**Target Learners:** Beginner Japanese students in New York colleges/community colleges
**Materials Needed:** Whiteboard, Genki vocabulary lists (Ch.1-3), scenario cards, timer
**Language Skills Practiced:** Speaking (invitations), listening (responses), vocabulary recall
**Time Required:** 30-40 minutes

### Background Scenario (in English)

You are a new student at a New York college and want to make friends while exploring the city. This weekend, you plan to visit the **American Museum of Natural History** (or a similar NYC landmark). Your task is to invite classmates to join you using Japanese. You must negotiate a day/time, suggest meeting points, and politely handle acceptances or refusals. The challenge: use only vocabulary and grammar from Genki Chapters 1-3.

### Key Vocabulary (from Genki Ch.1-3)

**Nouns:**
- コーヒー (coffee), おちゃ (tea), ばんごはん (dinner)
- にちようび (Sunday), どようび (Saturday), あさ (morning), ごご (afternoon)
- いま (now), あした (tomorrow), きょう (today)
- せんせい (teacher), ともだち (friend), がくせい (student)
- えいが (movie), ミュージアム (museum - loanword allowed in Ch.3)

**Verbs:**
- いきます (go), みます (see/watch), たべます (eat), のみます (drink), します (do)
- ねます (sleep), おきます (wake up), べんきょうします (study)

**Expressions:**
- いいですね (That's good!), ちょっと... (a little... [for hesitance])
- なんようび? (What day?), なんじ? (What time?)

### Task Steps

1. **Preparation (5 minutes)**
   - Review the vocabulary list above. Write down 2-3 invitation sentences using "~ませんか" (e.g., どようびにミュージアムへいきませんか。).
   - Brainstorm how to suggest meeting at a specific time/place (e.g., えきであいませんか。).
2. **Role-Play Practice (15 minutes)**
   - Pair up. One student is the "inviter," the other is the "classmate."
   - Inviter: Propose a museum visit using "~ませんか." Include day, time, and activity (e.g., にちようびのごごにミュージアムをみませんか。).
   - Classmate: Respond using Ch.1-3 vocabulary-either accept (いいですね!) or politely decline (ちょっと...べんきょうします).
   - Swap roles and repeat with a different day/activity.
3. **Expansion and Negotiation (10 minutes)**
   - In pairs, plan details:
     - Decide where to meet (e.g., がっこうで、パークで).
     - Suggest an after-museum activity (e.g., コーヒーをのみませんか。).
     - Use "なんじ?" or "なんようび?" to ask questions.
4. **Group Sharing (5 minutes)**
   - Each pair shares their final plan with the class in simple Japanese (e.g., どようびのごごにミュージアムへいきます。それから、コーヒーをのみます。).
5. **Optional Challenge**
   - If time allows, try inviting a "busy friend" (played by the teacher) who declines twice before accepting-practice persistent but polite invitations.

📄 Text/PDF

DeepSeek can generate an extremely large number of outputs almost for free. See all the files (in text format) in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekTasks

Projects are extremely useful as student-centered homework and authentic assessment. Like tasks, however, it takes a considerable amount of time and energy to design effective projects.

It turned out that DeepSeek (https://www.deepseek.com) can generate reasonably good projects. As with tasks, it works best to generate a few (probably 4-5) different projects by adjusting prompts; at least one of them is usually good enough for actual classroom instruction.

Sample Prompt (DeepSeek R1)

「~ませんか (e.g., コーヒーを飲みませんか。)」を練習するプロジェクトを、日本語の教科書「げんき」を使って勉強している日本語を学習している学生のために作ってください。「げんき」のCh.1-Ch.03までの単語を使うようにして、その範囲内の単語だけを使うようにしてください。プロジェクトは、だいたい1時間程度で終わる範囲で、ニューヨークにある日本に関するお店や団体、日本語が使われている場所などを利用するようにしてください。場所は日本のウェブサイトなどのオンラインでもいいです。プロジェクトは、提出できるdeliverableが明確にあるようにしてください。出力はデータのみにして、日本語の単語や例文以外は英語で書いて、そしてプロジェクトの名前、必要な教材、練習する言語スキル、必要な時間などを最初に書いて、プロジェクトの詳細なステップを書いてください。解説はつけないでください。

**Project Name:** "NYCでさがそう!~ませんか Invitation Project"

**Materials Needed:**
- "Genki I" Textbook (Ch.1-3 vocabulary reference)
- Internet access (for website research)
- Worksheet (printed or digital)
- Pen/Notebook or digital document

**Language Skills Practiced:**
- Grammar: ~ませんか (informal invitations)
- Vocabulary: Genki Ch.1-3 only
- Reading: Simple website navigation
- Writing: Forming invitation sentences

**Time Required:** Approximately 60 minutes

---

**Detailed Project Steps:**

1. **Preparation (5 minutes)**
   - Review the "~ませんか" grammar point from Genki.
   - Review vocabulary lists from Genki Chapters 1, 2, and 3.
   - Open a web browser.
2. **Research Phase (25 minutes)**
   - Visit the following real websites based in or related to New York:
     a. **Mitsuwa Marketplace (Edgewater, NJ - near NYC)** website: Look for sections like レストラン (restaurant), カフェ (cafe), 本 (books), 食べ物 (food).
     b. **Japan Society (NYC)** website: Look for イベント (events), ビデオ (videos), クラス (classes).
     c. **Kinokuniya Bookstore (NYC)** website: Look for 本, 雑誌 (magazines), 文房具 (stationery).
   - Using **only vocabulary from Genki Ch.1-3**, identify 5 items/activities you find. Write them down in Japanese.
     *Example Finds: コーヒー、おちゃ、えいが、ほん、ノート、てがみ、サンドイッチ、ミーティング*
3. **Sentence Creation Phase (20 minutes)**
   - Using your 5 found items/activities, create 5 invitation sentences using the "~ませんか" pattern.
   - Each sentence must use **only vocabulary and numbers from Genki Ch.1-3**.
     *Example Sentences:*
     1. きょう、コーヒーを飲みませんか。
     2. あした、ほんを読みませんか。
     3. 木よう日に、えいがを見ませんか。
     4. ここで、サンドイッチを食べませんか。
     5. にちよう日に、パーティーへ行きませんか。
4. **Deliverable Creation & Submission (10 minutes)**
   - Fill out the final worksheet with:
     A. The 5 items/activities you found (in Japanese).
     B. Your 5 original invitation sentences.
     C. One chosen invitation (from your 5 sentences) written neatly or typed for submission.
   - Submit your final chosen invitation sentence and the name of the website you used most to your instructor via email or the provided Google Form.

**Deliverable Format for Submission:**
- Student Name:
- Website Used Most:
- My Invitation: [One complete sentence using ~ませんか]

📄 Text/PDF

DeepSeek can generate an extremely large number of outputs almost for free. See all the files (in text format) in my Amazon S3 folder: https://t-nagano.link/FunWithAI-DeepSeekProjects

Although often overlooked, OpenAI's audio-generation, or text-to-speech (TTS), technology (https://openai.com) is a major asset. Before OpenAI's TTS models, numerous commercial and academic projects produced synthetic speech systems, but they all had noticeable accents that could alienate audiences. OpenAI applied large language model technology to generate synthetic speech, which outperformed previous models that attempted to emulate human phonetic and phonological patterns.

OpenAI first announced the tts-1 model in 2023 and later introduced the gpt-4o-mini-tts model in 2025. As of this writing, the latest model remains gpt-4o-mini-tts (gpt-4o-mini-tts-2025-12-15).

OpenAI's gpt-4o-mini-tts offers 13 different speakers (voices) and supports audio instructions, with which you can make minor adjustments to the speech patterns. Below is a prompt example to generate speech samples.

Sample Prompt (ChatGPT tts)

for speaker in "alloy" "ash" "ballad" "cedar" "coral" "echo" "fable" "marin" "nova" "onyx" "sage" "shimmer" "verse"; do generate_speech.py --audioModel gpt-4o-mini-tts-2025-12-15 --audioSpeaker $speaker --audioInstructions "Accent: warm, refined, and gently instructive, reminiscent of a friendly instructor. Tone: Calm, encouraging, and articulate. Pacing: Deliberate, pausing often to allow the listener to follow instructions comfortably. Emotion: Cheerful, supportive, and pleasantly enthusiastic" --text "The quick brown fox jumps over the lazy dog. one, two, three, four, five, six, seven, eight, nine, ten. Sally sells seashells by the seashore. Six sleek swans swam swiftly south."; done [INFO] Audio has been generated... ....

🎵 Audio (speech samples)

Although these models are not specifically trained for Japanese, they tend to do very well with Japanese speech. Below is a sample with Japanese text.

Sample Prompt (ChatGPT tts)

generate_speech.py "次の日本語の文章を音声に変更してください" --audioInstructions "Speak in Japanese. Accent: warm, refined, and gently instructive, reminiscent of a friendly instructor. Tone: Calm, encouraging, and articulate. Pacing: Natural speed. Make sure to pause for one or two seconds at the end of each sentence. Emotion: Cheerful, supportive, and pleasantly enthusiastic" --audioModel gpt-4o-mini-tts-2025-12-15 --audioSpeaker echo "音声チェックです。ただいま、マイクのテストを行っています。いち、に、さん、よん、ご、ろく、なな、はち、きゅう、じゅう。生麦、生米、生卵。隣の客は、よく柿食う客だ。ただいま、自然な速さと一定の音量で読んでいます。あいうえお、かきくけこ、さしすせそ。" [INFO] Audio has been generated... ....

🎵 Audio (speech samples)

The cost for OpenAI's tts is extremely affordable (about $0.01 per minute), which allows us to generate a large number of audio files as instructional materials.

Sample Prompt (ChatGPT tts)

generate_speech.py "次の日本語の文章を音声に変更してください" --audioInstructions "Speak in Japanese. Accent: warm, refined, and gently instructive, reminiscent of a friendly instructor. Tone: Calm, encouraging, and articulate. Pacing: Natural speed. Make sure to pause for one or two seconds at the end of each sentence. Emotion: Cheerful, supportive, and pleasantly enthusiastic" --audioModel gpt-4o-mini-tts-2025-12-15 --audioSpeaker echo "コーヒーを飲みませんか" "映画を見ませんか" "セントラルパークで写真を撮りませんか" "図書館で勉強しませんか" "メトロポリタン美術館へ行きませんか" ... [snip] [INFO] Audio has been generated... ....

🎵 Audio (speech samples)

There are a large number of audio files for practice sentences. See my Amazon S3 folder: https://t-nagano.link/FunWithAI-OpenAIAudio1
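The internals of generate_speech.py are not shown here, so the following is only a sketch of how a batch like this could be produced with the OpenAI Python SDK's speech endpoint. The file naming scheme, the output folder, and the function names are assumptions for illustration, and the instruction text is a shortened version of the one in the sample prompt. Planning the jobs is kept separate from sending them, so the list can be inspected before any API call is made.

```python
from pathlib import Path
from typing import Dict, List

MODEL = "gpt-4o-mini-tts"  # this write-up pins the snapshot gpt-4o-mini-tts-2025-12-15
INSTRUCTIONS = (
    "Speak in Japanese. Tone: Calm, encouraging, and articulate. "
    "Pacing: Natural speed."
)


def build_jobs(sentences: List[str], voice: str, out_dir: str) -> List[Dict[str, str]]:
    """Plan one audio file per sentence (hypothetical naming: 001_echo.mp3, ...)."""
    jobs = []
    for index, sentence in enumerate(sentences, start=1):
        path = Path(out_dir) / f"{index:03d}_{voice}.mp3"
        jobs.append({"input": sentence, "voice": voice, "path": str(path)})
    return jobs


def synthesize(jobs: List[Dict[str, str]]) -> None:
    """Send each planned job to the API; requires OPENAI_API_KEY and the openai package."""
    from openai import OpenAI  # imported here so planning works without the SDK

    client = OpenAI()
    for job in jobs:
        Path(job["path"]).parent.mkdir(parents=True, exist_ok=True)
        with client.audio.speech.with_streaming_response.create(
            model=MODEL,
            voice=job["voice"],
            input=job["input"],
            instructions=INSTRUCTIONS,
        ) as response:
            response.stream_to_file(job["path"])


jobs = build_jobs(["コーヒーを飲みませんか", "映画を見ませんか"], voice="echo", out_dir="audio")
for job in jobs:
    print(job["path"], job["input"])
# Call synthesize(jobs) to actually generate the audio (this incurs API cost).
```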

Eleven Labs (https://elevenlabs.io) specializes in AI-based speech synthesis and other audio-based services (such as transcription). In terms of quality, it probably surpasses recorded speech by non-professional humans - the audio emulates human speech perfectly and does not have any unintended interruptions such as external noise or coughing. You will have absolute control over speech synthesis and can add various extra-speech features by using tags such as [surprised] and [uninterested]. There are over 1,000 speakers to choose from, and you can also use your own voice as a speaker by uploading a short (2-3 min) speech sample.

Eleven Labs is probably the best speech synthesis service (a lot better than ChatGPT's tts), but it costs a lot more than other services. On average, Eleven Labs costs about $0.2-0.3 per minute, while the average cost of ChatGPT tts is about $0.01-0.02 per minute.
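To make the difference concrete, the per-minute figures above can be turned into a rough budget. This is a back-of-the-envelope sketch that uses only the approximate rates quoted in this section (actual pricing depends on the plan and model); rates are held in cents to keep the arithmetic exact.

```python
from typing import Dict, Tuple

# Approximate rates from this section, in cents per minute (low, high).
RATES_CENTS_PER_MINUTE: Dict[str, Tuple[int, int]] = {
    "Eleven Labs": (20, 30),
    "ChatGPT tts": (1, 2),
}


def cost_range(minutes: int, service: str) -> Tuple[float, float]:
    """Return the (low, high) estimated cost in dollars for the given minutes."""
    low, high = RATES_CENTS_PER_MINUTE[service]
    return (minutes * low / 100, minutes * high / 100)


for service in RATES_CENTS_PER_MINUTE:
    low, high = cost_range(60, service)
    print(f"{service}: ${low:.2f}-${high:.2f} for 60 minutes")
# Eleven Labs: $12.00-$18.00 for 60 minutes
# ChatGPT tts: $0.60-$1.20 for 60 minutes
```

For an hour of audio, the gap is roughly $12-18 versus about $1, which is why the cheaper service is the practical choice for bulk practice sentences.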

Eleven Labs offers a free subscription, which comes with some credits (sufficient for 10-15 min of speech synthesis).

Below are speech samples for Eleven Labs. Eleven Labs offers over 1,000 voices and an option to use your own voice, so these are just a fraction of the speech samples that you can generate with Eleven Labs.

Sample Prompt (Eleven Labs)

generate_speech.py --model-id "eleven_v3" --voice-id Ellen "音声チェックです。ただいま、マイクのテストを行っています。いち、に、さん、よん、ご、ろく、なな、はち、きゅう、じゅう。生麦、生米、生卵。隣の客は、よく柿食う客だ。ただいま、自然な速さと一定の音量で読んでいます。あいうえお、かきくけこ、さしすせそ。" [INFO] Audio has been generated... ....

🎵 Audio (speech samples)

I processed the audio for the sample sentences again with ElevenLabs (see the OpenAI TTS section above). The audio quality is significantly better than that of OpenAI's TTS.

Sample Prompt (Eleven Labs)

generate_speech.py --voice-id "Yui" --model-id "eleven_v3" "コーヒーを飲みませんか" "映画を見ませんか" "セントラルパークで写真を撮りませんか" "図書館で勉強しませんか" "メトロポリタン美術館へ行きませんか" ... [snip] [INFO] Audio has been generated... ....

🎵 Audio (speech samples)

There are a large number of audio files for practice sentences. See my Amazon S3 folder: https://t-nagano.link/FunWithAI-ElevenLabsAudio1

Eleven Labs offers a great deal of control over speech synthesis and you can make a dialogue among multiple different people (voices) too. Below is an example of the dialogue speech synthesis.

Sample Prompt (Eleven Labs)

generate_speech.py --model-id "eleven_v3" --dialogue-voice-map "Male1:Akira, Female1:Shizuka, Male2:Austin, Female2:Ellen"
Female1: [warmly] こんにちは。たかしさん、お元気ですか。
Male1: はい、元気です。ゆかりさん、こちらは友達のマイクさんとサラさんです。
Female2: [curious] はじめまして。サラです。アメリカ人です。学生です。
Male2: はじめまして。マイクです。アメリカ人です。よろしくお願いします。
Female1: 私はゆかりです。日本人です。大学で日本語を勉強しています。
Male1: 私はたかしです。日本人です。学生です。
Female2: [thoughtful] あのう、日本語の本を読みませんか。
Male2: [surprised] 本ですか?いいですね。
Female1: [warmly] そうですね。一緒に図書館へ行きませんか。
Male1: ええ、行きましょう。
Female2: [laugh] ありがとうございます。
Male2: [curious] 図書館はどこですか。
Female1: 大学の近くです。
Male1: [warmly] じゃあ、一緒に行きましょう。
Female2: はい、お願いします。
[INFO] Audio has been generated... ....

🎵 Audio (speech samples)

There are some audio files generated by ElevenLabs in my Amazon S3 folder: https://t-nagano.link/FunWithAI-ElevenLabsDialogue
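Before a dialogue is sent for synthesis, each line has to be paired with the voice assigned to its speaker. The sketch below shows one way to do that pairing, assuming the script is stored with one "Speaker: text" turn per line and the voice map uses the same "Role:Voice" format as the --dialogue-voice-map option above. The function names and the output shape (a list of voice/text pairs) are illustrative assumptions, and the voice names are the labels used in this write-up rather than API voice IDs.

```python
import re
from typing import Dict, List

# A turn looks like "Female1: [warmly] こんにちは。"
TURN = re.compile(r"^(?P<speaker>\w+):\s*(?P<text>.+)$")


def parse_voice_map(spec: str) -> Dict[str, str]:
    """Turn 'Male1:Akira, Female1:Shizuka' into {'Male1': 'Akira', ...}."""
    mapping = {}
    for item in spec.split(","):
        role, voice = item.split(":", 1)
        mapping[role.strip()] = voice.strip()
    return mapping


def parse_dialogue(script: str, voices: Dict[str, str]) -> List[Dict[str, str]]:
    """Pair each turn with its voice; emotion tags such as [warmly] stay in the text."""
    turns = []
    for line in script.splitlines():
        match = TURN.match(line.strip())
        if not match:
            continue  # skip blank lines and log output
        speaker = match.group("speaker")
        if speaker not in voices:
            raise KeyError(f"No voice assigned to speaker {speaker!r}")
        turns.append({"voice": voices[speaker], "text": match.group("text").strip()})
    return turns


voices = parse_voice_map("Male1:Akira, Female1:Shizuka")
script = """Female1: [warmly] こんにちは。たかしさん、お元気ですか。
Male1: はい、元気です。"""

turns = parse_dialogue(script, voices)
for turn in turns:
    print(turn["voice"], "->", turn["text"])
```

Raising an error for an unmapped speaker catches typos in role labels before any credits are spent.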
